Chapter 7: AI Under the Hood

Overview

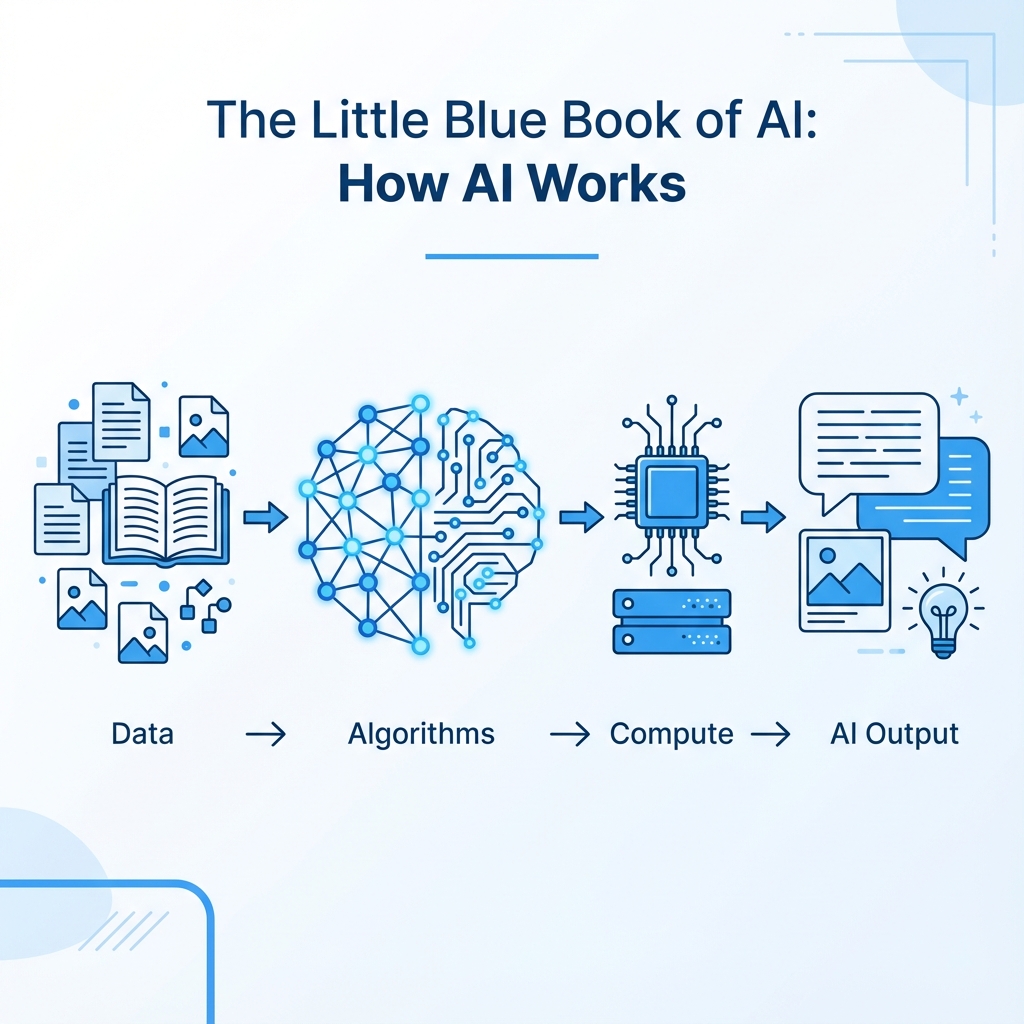

AI can feel mysterious from the outside, but underneath it is built from math, models, and computing hardware working together. This chapter breaks down the major components that make modern AI systems work.

Understanding these pieces doesn’t require deep technical knowledge — just a sense of how information flows through an AI system to produce the outputs you see.

How AI Systems Work

Neural Networks

Neural networks are computational structures loosely inspired by how neurons connect in the brain. They process information layer by layer, and they learn patterns by gradually adjusting the strength of the connections between layers during training.

Early neural networks had only a few layers. Today’s systems may have dozens or hundreds, enabling them to recognize complex patterns in text, images, or audio.
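To make "layer by layer" concrete, here is a minimal sketch of a two-layer network in plain Python. The weights, biases, and the choice of sigmoid as the activation function are illustrative assumptions, not values from any real system; a trained network would learn its weights from data.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of the inputs,
    adds a bias, and passes the result through sigmoid."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs

# Hypothetical hand-picked weights; a real network learns these from data.
hidden_weights = [[0.5, -0.2], [0.8, 0.1]]   # two neurons, two inputs each
hidden_biases = [0.0, -0.1]
output_weights = [[1.0, -1.0]]               # one neuron, two inputs
output_biases = [0.0]

inputs = [1.0, 2.0]
hidden = layer(inputs, hidden_weights, hidden_biases)   # first layer
output = layer(hidden, output_weights, output_biases)   # second layer
print(hidden, output)
```

The output of the first layer becomes the input of the second. Deeper networks repeat this same step many more times.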

Neural networks are the foundation of nearly all modern AI — from voice assistants to recommendation algorithms to large language models.

Transformers

Transformers, introduced in 2017, revolutionized AI by allowing models to understand context across long sequences of text or data.

Unlike older recurrent networks, which processed words one at a time, transformers use a mechanism called attention to weigh every part of a passage against every other part at once. This lets them capture meaning, relationships, and structure even when the related words sit far apart in the text.
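The core of attention can be sketched in a few lines. The example below is a simplified illustration of scaled dot-product attention in plain Python. It assumes three made-up 2-number vectors standing in for three words, and it reuses the same vectors as queries, keys, and values; real transformers derive those three roles from learned projections and work with far larger vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Compare this position's query against every position's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend all value vectors, weighted by how relevant each one is.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs

# Hypothetical vectors standing in for three words in a sentence.
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(vectors, vectors, vectors)
for row in result:
    print(row)
```

Every position looks at every other position in a single step, which is the property the paragraph above describes.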

Transformers power today’s most capable systems — including ChatGPT, Claude, Gemini, and many multimodal models.

Models

An AI model is the learned mathematical structure that produces outputs — such as text, images, predictions, or classifications. It is created by training a neural network on large amounts of data.

Models adjust billions (or even trillions) of internal parameters during training. These parameters encode the patterns the model has learned.
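As a rough illustration of what "adjusting a parameter" means, the sketch below trains a model with exactly one parameter using gradient descent. The data, the learning rate, and the number of steps are all made-up values chosen for the example; real models apply the same idea to billions of parameters at once.

```python
# Hypothetical training data that follows the pattern y = 3 * x.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

w = 0.0               # the single parameter, starting from an uninformed guess
learning_rate = 0.05  # assumed step size for this example

for step in range(200):
    # Gradient of the mean squared error with respect to w.
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    # Nudge the parameter in the direction that reduces the error.
    w -= learning_rate * grad

print(round(w, 3))
```

After training, `w` ends up very close to 3. The pattern in the data is now encoded in the parameter.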

Examples of models include:

- Large Language Models (LLMs)

- Vision models for image classification

- Audio models for speech recognition

- Multimodal models capable of text + image + audio understanding

Large Language Models (LLMs)

LLMs are a specific type of model trained on massive collections of text. They work by predicting the most likely next word (or token) in a sequence.

This simple mechanism, scaled up across huge datasets and enormous computing power, enables capabilities like conversation, summarization, translation, code generation, and reasoning.
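Next-token prediction can be pictured as scoring every candidate token and converting the scores into probabilities. The sketch below does this for three candidates. The prompt, the candidate words, and their scores are invented for illustration; a real LLM computes scores for tens of thousands of tokens using its learned parameters.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores a model might assign to candidate next tokens
# after the prompt "The cat sat on the".
scores = {"mat": 4.0, "sofa": 2.5, "moon": 0.5}

probs = dict(zip(scores, softmax(list(scores.values()))))
next_token = max(probs, key=probs.get)   # pick the most likely candidate

for token, p in probs.items():
    print(f"{token}: {p:.3f}")
print("predicted:", next_token)
```

Here the model would pick "mat". In practice, systems often sample from the probabilities rather than always taking the top choice, which is one reason the same prompt can produce different answers.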

Modern frontier LLMs can also work with images, audio, and video — making them multimodal.

Multimodal AI

Multimodal AI can understand and generate more than one type of data — such as text, images, audio, and video.

This unlocks abilities like:

- Describing images

- Interpreting charts

- Reading handwriting

- Turning sketches into designs

- Answering questions about videos

Multimodality brings AI closer to understanding the world the way humans do — through multiple senses working together.

Hardware: CPUs, GPUs, TPUs, and NPUs

AI systems run on specialized hardware designed for fast, parallel computation.

CPUs are general-purpose chips that handle everyday computing well, but they have far fewer parallel cores than GPUs, which makes them too slow for large-scale AI training.

GPUs (Graphics Processing Units) perform thousands of calculations simultaneously, making them ideal for training neural networks.

TPUs (Tensor Processing Units) and other custom chips are built specifically for AI workloads, accelerating matrix operations used in deep learning.

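NPUs (Neural Processing Units) now ship inside laptops and smartphones, enabling on-device AI. Running a model locally avoids a round trip to a server, keeps data on the device, and typically uses less power than running the same work on a CPU.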
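The matrix operations mentioned above are a natural fit for parallel hardware because every cell of the result can be computed independently. The plain-Python sketch below computes the cells one after another to show the structure. The matrices are arbitrary example values, and this is not how a GPU or TPU is actually programmed.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    result = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Each output cell depends only on row i of a and column j of b,
            # so no cell has to wait for any other cell to finish.
            result[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return result

a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
product = matmul(a, b)
print(product)  # [[19.0, 22.0], [43.0, 50.0]]
```

A CPU works through these cells a few at a time. A GPU can assign them to thousands of cores and compute many at once.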

Power & Infrastructure

Modern AI requires enormous computing resources. Training large models means running thousands of GPUs or TPUs for weeks or months.

This consumes significant electricity and demands substantial cooling, making data centers and energy efficiency key parts of the AI ecosystem.
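A quick back-of-the-envelope calculation shows how the numbers add up. Every input below is an illustrative placeholder rather than a measurement of any real training run; substitute your own figures to estimate a specific case.

```python
# All three inputs are illustrative placeholders, not measurements.
num_gpus = 2000            # assumed number of accelerators
days = 30                  # assumed length of the training run
kilowatts_per_gpu = 0.5    # assumed average power draw per accelerator

gpu_hours = num_gpus * days * 24
energy_kwh = gpu_hours * kilowatts_per_gpu

print(f"GPU-hours: {gpu_hours:,}")
print(f"Energy: {energy_kwh:,.0f} kWh")
```

With these placeholder inputs the run comes to 1,440,000 GPU-hours and 720,000 kWh, before counting the energy spent on cooling.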

AI is increasingly shaped by advances in infrastructure — from cloud clusters to distributed training to edge computing.

Autocomplete at Scale

At its core, a large language model is a sophisticated autocomplete engine. It predicts the next token based on everything it has learned during training.

Text is generated one token at a time, with each prediction fed back in as input for the next. This simple loop, scaled with billions of parameters and vast amounts of data, produces the complex behavior we associate with intelligent systems.
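The autocomplete idea can be shown with a toy model that only counts which word tends to follow which. The training sentence below is made up, and this counting approach is a deliberately simplified stand-in; real LLMs use neural networks rather than lookup tables and consider far more context than the previous word.

```python
from collections import Counter, defaultdict

# A tiny hypothetical "training set"; real models learn from vastly more text.
text = "the cat sat on the mat and the cat slept"
words = text.split()

# "Training": count how often each word follows each other word.
following = defaultdict(Counter)
for current, nxt in zip(words, words[1:]):
    following[current][nxt] += 1

def predict_next(word):
    """Return the word most often seen after `word`, or None if unseen."""
    if word not in following:
        return None
    return following[word].most_common(1)[0][0]

print(predict_next("the"))  # cat
print(predict_next("on"))   # the
```

The model predicts "cat" after "the" only because that pairing appeared most often in its training text, not because it knows what a cat is.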

AI does not understand meaning the way humans do — but it can generate highly convincing patterns of language based on statistical likelihood.

Key Takeaway

AI’s capabilities come from the combination of neural networks (especially the transformer architecture), hardware acceleration, and massive training datasets. While AI may feel magical, it is ultimately a product of math, computation, and engineering.

Understanding these components helps demystify AI and gives you a clearer sense of how modern systems generate the outputs you see.